Monday, January 2. 2012

Public Access to Federally Funded Research (Harnad Response to US OSTP RFI) (Part 2 of 2)

[Part 2: see also Part 1]

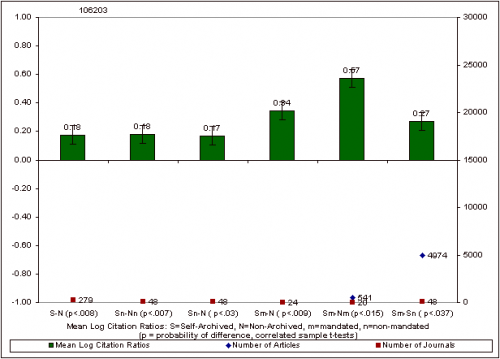

QUESTION 8 (8a, 8b):

(Question 8a) “What is the appropriate embargo period after publication before the public is granted free access to the full content of peer-reviewed scholarly publications resulting from federally funded research?”

There is no real reason any would-be user should ever be denied access to publicly funded research journal articles. Over 60% of journals (and virtually all the top journals) already endorse immediate green OA to the author’s final draft.

But if federal funding agencies wish to accommodate the <40% of journals that do not yet endorse immediate green OA, an embargo period (preferably no longer than 6 months) could be allowed.

The crucial thing, however, is that the embargo should not apply to the date at which deposit of the author’s final, peer-reviewed draft in the author’s institutional repository is required. That deposit should be done immediately upon acceptance for publication, for all articles, without exception.

The allowable OA embargo should apply only to whether access to the immediate-deposit is made OA immediately, or access is instead set as “Closed Access” during the allowable embargo period.

Harnad, S. (2006) The Immediate-Deposit/Optional-Access (ID/OA) Mandate: Rationale and Model. Open Access Archivangelism

SUMMARY: Universities and research funders are both invited to use this document. Note that this recommended "Immediate-Deposit & Optional-Access" (IDOA) policy model (also called the "Dual Deposit/Release Strategy") has been specifically formulated to be immune from any delays or embargoes (based on publisher policy or copyright restrictions): The deposit -- of the author's final, peer-reviewed draft of all journal articles, in the author's own Institutional Repository (IR) -- is required immediately upon acceptance for publication, with no delays or exceptions. But whether access to that deposit is immediately set to Open Access or provisionally set to Closed Access (with only the metadata, but not the full-text, accessible webwide) is left up to the author, with only a strong recommendation to set access as Open Access as soon as possible (immediately wherever possible, and otherwise preferably with a maximal embargo cap at 6 months).

This IDOA policy is greatly preferable to, and far more effective than a policy that allows delayed deposit (embargo) or opt-out as determined by publisher policy or copyright restrictions. The restrictions apply only to the access-setting, not to the deposit, which must be immediate. Closed Access deposit is purely an institution-internal book-keeping matter, with the institution's own assets, and no publisher policy or copyright restriction applies to it.

[In the meantime, if there needs to be an embargo period, the IR software has a semi-automated EMAIL EPRINT REQUEST button that allows any would-be user to request (by entering their email address and clicking) and then allows any author to provide (by simply clicking on a URL that appears in the eprint request received by email) a single copy of the deposited draft, by email, on an individual basis (a practice that falls fully under Fair Use). This provides almost-immediate, almost-Open Access to tide over research usage needs during any Closed Access period.]

(Question 8b) “Please describe the empirical basis for the recommended embargo period.”

The many empirical studies that have – in every research field tested – repeatedly demonstrated the research impact advantage (in terms of both downloads and citations) of journal articles that have been made (green) OA, compared to articles in the same journal and year that have not been made OA, have also found that the OA impact advantage is greater (and, of course, comes earlier) the earlier the article is made OA. The advantage of early OA extends also to preprints made OA even before peer review. Delayed access means not only delayed impact but also lost impact, in areas of research where it is important to strike while the iron is hot. See especially the findings of the Harvard astrophysicist, Michael Kurtz in:

Bibliography of Findings on the Open Access Impact Advantage

The optimal OA embargo period is zero: peer-reviewed research findings should be accessible to all potential users immediately upon acceptance for publication. Studies have repeatedly shown that both denying and delaying access diminish research uptake and impact. Nor does delayed access just mean delayed impact: Especially in rapid-turnaround research areas (e.g. in areas of physics and biology) delaying access can mean permanent impact loss (see Figure 6):

Gentil-Beccot A, Mele S, Brooks T.C. (2010) Citing and reading behaviours in high-energy physics. Scientometrics 84(2):345–55

EXTRA QUESTIONS (X1, X2, X3):

Question X1. “Analyses that weigh public and private benefits and account for external market factors, such as competition, price changes, library budgets, and other factors, will be particularly useful.”

Please see the careful comparative economic analyses of John Houghton and co-workers (Figure 1):

Houghton, J.W. & Oppenheim, C. (2009) The Economic Implications of Alternative Publishing Models. Prometheus 26(1): 41-54

Houghton, J.W., Rasmussen, B., Sheehan, P.J., Oppenheim, C., Morris, A., Creaser, C., Greenwood, H., Summers, M. and Gourlay, A. (2009). Economic Implications of Alternative Scholarly Publishing Models: Exploring the Costs and Benefits, London and Bristol: The Joint Information Systems Committee (JISC)

Houghton, J.W. and Sheehan, P. (2009) Estimating the potential impacts of open access to research findings, Economic Analysis and Policy, vol. 39, no. 1, pp. 127-142.

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28 (1): 55-59

Question X2. “Are there evidence-based arguments that can be made that the delay period should be different for specific disciplines or types of publications?”

The optimal OA delay period is zero: the research reported in peer-reviewed journal/conference articles should be accessible to all potential users immediately upon acceptance for publication, in all disciplines. There is no real reason any would-be user should ever be denied access to publicly funded research journal articles. Over 60% of journals (and virtually all the top journals) already endorse immediate green OA to the author’s final draft.

But if federal funding agencies wish to accommodate the <40% of journals that do not yet endorse immediate green OA, an embargo period (preferably no longer than 6 months) could be allowed.

The crucial thing, however, is that the embargo should not apply to the date at which deposit of the author’s final, peer-reviewed draft in the author’s institutional repository is required. That deposit should be done immediately upon acceptance for publication, for all articles, without exception.

The allowable OA embargo should apply only to whether access to the immediate-deposit is made OA immediately, or access is instead set as “Closed Access” during the allowable embargo period.

Question X3. “Please identify any other items the Task Force might consider for Federal policies related to public access to peer-reviewed scholarly publications resulting from federally supported research.”

If Federal funding agencies mandate green OA self-archiving of the fundee’s final draft of all peer-reviewed journal articles resulting from federally funded research, deposited in the fundee’s institutional repository immediately upon acceptance for publication (ID/OA mandate), this will not only generate 100% OA for all US federally funded research, but it will inspire funders as well as universities and research institutions worldwide to follow the US’s model, reciprocating with OA mandates of their own, thereby ushering in the era of open access to all research, worldwide, in all fields, funded and unfunded (see mandate growth curve from ROARMAP (Registry of Open Access Mandatory Archiving Policies), Figure 2).

Monday, December 19. 2011

American Scientist Open Access Forum Has Migrated to GOAL (Global Open Access List)

The straw poll on whether or not to continue the American Scientist Open Access (AmSci) Forum (and if so, who should be the new moderator) is complete (the full results are reproduced at the end of this message). The vote is for (1) continuing the Forum, under (2) the moderatorship of Richard Poynder.

The AmSci list has now been migrated to http://mailman.ecs.soton.ac.uk/mailman/listinfo/goal where the BOAI list is also being hosted.

AmSci Forum members need not re-subscribe. All subscriptions have been automatically transferred to the new host site.

The name of the list has been changed to the Global Open Access List (GOAL) to reflect the fact that Open Access is no longer just an American or a Scientific matter. It has become a global movement.

The old AmSci Forum Archives (1998-2011) will stay up at the Sigma Xi site (indefinitely, I hope -- though we do have copies of the entire archive).

The new GOAL archive is at: http://mailman.ecs.soton.ac.uk/pipermail/goal/

Stevan Harnad

Below are the complete results of the straw poll on whether to continue the Forum, and on who should be the new moderator:

AGAINST CONTINUING AMSCI:

ARIF JINHA: I believe it would be better to have one forum, the BOAI. This forum has developed a doctrinal bias defined by the values and personality of its leadership. Though the leadership is to be commended for its credibility and vigour, it is not without its blind spots. It has not always been OPEN to a diversity of perspectives. AMSCI is driven by assertive and competitive advocacy for mandates over Gold OA publishing. The rush to conclusion on the right path is premature and overly authoritative in its expression, therefore it is alienating. In truth, we have only really got started with the web in the last 10 years and authority is completely flattened by the learning curve. The BOAI is much wider in its representation of Open Access alternatives, it is therefore more neutral as well as having a wider reach for the promotion of Green OA. It means less duplication and less work devoted to instant communication, giving more time to develop a rigorous and scientific approach to meta-scholarship in the digital age.

FOR CONTINUING AMSCI:

DANA ROTH: I would disagree with Arif Jinha, in that it is the 'assertive and competitive advocacy for mandates over Gold OA publishing' that make AMSCI such an interesting listserv.

SUBBIAH ARUNACHALAM: First, I wish to express my grateful thanks to Stevan for all that he has done so far, and in particular for moderating this Forum for so long and so well. That he will continue to devote much of his time to promoting open access and institutional repositories gives me strength to do the same. Second, if Richard Poynder agrees (or if we could persuade him) to moderate this list, there is nothing like it. The baton would have moved to safe hands. Not only he has the stamina of a long distance runner, but he is also endowed with the qualities needed for a moderator. He is knowledgeable and levelheaded. Welcome Richard!

DOMINIQUE BABINI: Discussions and ideas in this forum are also inspiring for regional OA forums and lists, e.g., the Latin America and the Caribbean Open Access List (LLAAR, in Spanish). Thank you, Stevan, for your dedication as moderator all these years, and especially for your new OA initiatives and ideas. Thank you for your Skype contribution at the OA Experts Meeting last week in UNESCO headquarters, where we missed you [in person]. I also support Richard Poynder as [new] moderator for this Forum.

MICHAEL E. SMITH: I am in favor of continuing the list, and either of the people you mentioned as potential moderators would be good choices.

PAOLA GARGIULO: I also agree that the list should continue. I'm in favour of Richard Poynder as moderator. Hope you will continue to contribute.

PETER SUBER: If Richard is willing to moderate, I vote for him. I second Alma's reasons why Richard would do well in this role. I second Arthur's best wishes to you, and I second (or third) Barbara and Hélène's tribute to your work. Finally, as the former moderator of SOAF and BOAI, I welcome you to civilian life. It's amazing what one can do when one has more time to do it.

BERNARD RENTIER: I vote for Richard Poynder. The excellence of his critical and fair papers speaks for his designation. If he is willing to do that, I am sure he will be an outstanding moderator. And that this will let Stevan be even more tirelessly to the point in every debate!

TOM COCHRANE: The value of the Forum cannot be overstated. It has provided a unique service in assessing the events and health of OA developments. It would be a regressive step in several ways if it were to fall over. It is not too much to claim that its way of charting developments, alerting readers to new issues, identifying useful research and work on OA, and in your hands, reminding its readership of the main issues – all these have had a direct impact on practical developments. This has occurred to a degree that no single one of us – from whatever part of the world - can comprehensively take in. But believe me, it has played a vital role. But individual workloads need to be shared, and we at QUT understand your reasoning. We are happy with the Richard Poynder suggestion.

ELOY RODRIGUES: I also support Richard Poynder for moderator. I strongly support the continuation of the AmSci Forum, and I regret your decision of stepping down as moderator (even though I understand your reasons, and I do hope that it will turn out the right decision for you, and your efforts for OA progress). Thanks for your tireless work for Open Access! All the best (from Rio de Janeiro, where I was also archivangilizing for ID/OA mandates, at the Portuguese-Brazilian OA conference).

KEITH JEFFERY: I am sorry it has come to this; you know I support your point of view and moderation does require correction of misconceptions as well as just posting. I wish the Amsci list to continue and Richard is, of course, an excellent choice as future moderator.

ANDREW A ADAMS: I am in favour of the forum continuing to operate. I feel Richard would make an excellent new moderator.

PIPPA SMART: I am in favour of the forum continuing and would be very happy for Richard Poynder to moderate.

MARC COUTURE: I definitely wish the forum to continue. I may be only the occasional contributor, but I've always been a very steady reader. As to you not being the moderator anymore, I think it's even a good thing, not because I share the opinion that a moderator should be neutral and discreet, but because it will spare you some precious time you could devote to useful purposes, OA-related or not. Note that I assume we will continue to benefit, in the forum, from the seemingly inexhaustible energy and the flawless, razor-sharp logic of our "weary" archivangelist.

BARRY MAHON: As a long time stirring stick in the OA (hard to know what word to use to describe it) world, and having crossed swords with both Stevan and Richard over the years, I have a heavy heart in accepting Stevan's decision but an uplift that Richard has volunteered. It will, I wish, go on....and I'll be there, or here, whichever is the more appropriate.

JEAN-CLAUDE GUÉDON: I also think this list should go on. And having Richard or Thomas moderate is a good idea too.

BOB PARKS: Congratulations on stepping down. I hope it gives you more time to pursue OA!!! Either Krichel or Poynder would be a good moderator. I fear that Krichel is over committed.

HEATHER MORRISON: Thanks very much for moderating the list all these years! I hope that the list will continue, and would support either Richard Poynder or Thomas Krichel as moderators.

SALLY MORRIS: The support for Richard as moderator of the continuing list seems clear. We really don't need to see all the messages - I thought that was the point of keeping them off the list?

THOMAS KRICHEL: I think it should continue, as it appears to be the largest and most active forum. I volunteer to do it…. If Richard wants to do it, I'd be happy not to.

RICHARD POYNDER: Well I certainly vote for it to continue. I would even put my name down for the moderator's hat if it was felt appropriate for a journalist to run such a forum, and people believed I could do the job adequately

ALMA SWAN: I am writing to nominate Richard Poynder as the new moderator for the AmSci Forum. I think he brings the right qualities - amongst them honesty, fairness, intellectual curiousness and efficiency - and is hugely respected as an independent, critical thinker on the issues that AmSci covers. I want the Forum to continue because it is a real discussion list rather than a bulletin board…

HELENE BOSC: In memory of the remarkable work done by Stevan Harnad for Open Access through this list, during 14 years, I wish it continues... Richard Poynder would be a perfect moderator!

BARBARA KIRSOP: If Stevan feels he can better operate in support of OA not as the moderator, then it would be great indeed if Richard Poynder would adopt the mantle. I think AMSCI should continue. I am somewhat in favour of a name change to highlight OA rather than the US - a name change could be a mini-re-launch perhaps and bring in new contributors - a fitting tribute to Stevan's past efforts.

ARTHUR SALE: May I wish you the best as non-moderator. It is the right decision for you, I think. This may be a shock to you that I think that it is a plus, but I think we need to get new ideas into the OA transition, and you have done your bit and a lot more… and perhaps I can even convince you eventually that the Titanium Road is the way to go now! You will be bombarded with messages begging you to reconsider, but I do think it is the right decision. Then you can enjoy being yourself without constraint. No one person can bear the weight of the world, not even Atlas.

IRYNA KUCHMA: The AmSci Open Access Forum is an active discussion forum (SOAF and BOAI are more like the announcement lists) and my answer is (1) definitely to continue. It's sad that you've decided to step down as a moderator. I wish I could help you with moderating it, but I am travelling a lot and sometimes not able to moderate the BOAI on time…. Hope you will find the ways to continue.

EUGENE GARFIELD: When I think about Stevan Harnad another information pioneer comes to mind. The Belgian documentalist Paul Otlet. His collaborator Henri LaFontaine, received the Nobel Peace Prize. That's the kind of recognition that Stevan deserves.

MICHAEL KURTZ: I liked that AMSCI reflected your point of view, I value that, and expect I will always. I hope I will always be able to discover it, perhaps you will frequently post.

THIERRY CHANIER: First of all, I would like to deeply thank Stevan for his continuous work over these more than 10 years. This forum is a very important place where supporters of OA can find information, and share their actions. I would be happy if Richard Poynder becomes our moderator. As Barbara (Kirsop) put it: it may be time to change the name of our forum, forget the American qualifier in order to reflect its international wider status.

TONY HEY: I think that Stevan must take some credit from the UK Government's decision to insist on open access to publications and data ... Well done Stevan and thanks for all your tireless proselytizing on behalf of open access!

Wednesday, February 23. 2011

Critique of McCabe & Snyder: Online not= OA, and OA not= OA journal

Comments on:

The following quotes are from McCabe, MJ (2011) Online access versus open access. Inside Higher Ed. February 10, 2011.

McCabe, MJ & Snyder, CM (2011) Did Online Access to Journals Change the Economics Literature?

Abstract: Does online access boost citations? The answer has implications for issues ranging from the value of a citation to the sustainability of open-access journals. Using panel data on citations to economics and business journals, we show that the enormous effects found in previous studies were an artifact of their failure to control for article quality, disappearing once we add fixed effects as controls. The absence of an aggregate effect masks heterogeneity across platforms: JSTOR boosts citations around 10%; ScienceDirect has no effect. We examine other sources of heterogeneity including whether JSTOR benefits "long-tail" or "superstar" articles more.

MCCABE: …I thought it would be appropriate to address the issue that is generating some heat here, namely whether our results can be extrapolated to the OA environment….

1. Selection bias and other empirical modeling errors are likely to have generated overinflated estimates of the benefits of online access (whether free or paid) on journal article citations in most if not all of the recent literature.

If "selection bias" refers to authors' bias toward selectively making their better (hence more citeable) articles OA, then this was controlled for in the comparison of self-selected vs. mandated OA, by Gargouri et al (2010) (uncited in the McCabe & Snyder (2011) [M & S] preprint, but known to the authors -- indeed the first author requested, and received, the entire dataset for further analysis: we are all eager to hear the results).

If "selection bias" refers to the selection of the journals for analysis, I cannot speak for studies that compare OA journals with non-OA journals, since we only compare OA articles with non-OA articles within the same journal. And it is only a few studies, like Evans and Reimer's, that compare citation rates for journals before and after they are made accessible online (or, in some cases, freely accessible online). Our principal interest is in the effects of immediate OA rather than delayed or embargoed OA (although the latter may be of interest to the publishing community).

MCCABE: 2. There are at least 2 “flavors” found in this literature: 1. papers that use cross-section type data or a single observation for each article (see for example, Lawrence (2001), Harnad and Brody (2004), Gargouri, et. al. (2010)) and 2. papers that use panel data or multiple observations over time for each article (e.g. Evans (2008), Evans and Reimer (2009)).

We cannot detect any mention or analysis of the Gargouri et al. paper in the M & S paper…

MCCABE: 3. In our paper we reproduce the results for both of these approaches and then, using panel data and a robust econometric specification (that accounts for selection bias, important secular trends in the data, etc.), we show that these results vanish.

We do not see our results cited or reproduced. Does "reproduced" mean "simulated according to an econometric model"? If so, that is regrettably too far from actual empirical findings to be anything but speculations about what would be found if one were actually to do the empirical studies.

MCCABE: 4. Yes, we “only” test online versus print, and not OA versus online for example, but the empirical flaws in the online versus print and the OA versus online literatures are fundamentally the same: the failure to properly account for selection bias. So, using the same technique in both cases should produce similar results.

Unfortunately this is not very convincing. Flaws there may well be in the methodology of studies comparing citation counts before and after the year in which a journal goes online. But these are not the flaws of studies comparing citation counts of articles that are and are not made OA within the same journal and year.

Nor is the vague attribution of "failure to properly account for selection bias" very convincing, particularly when the most recent study controlling for selection bias (by comparing self-selected OA with mandated OA) has not even been taken into consideration.

Conceptually, the reason the question of whether online access increases citations over offline access is entirely different from the question of whether OA increases citations over non-OA is that (as the authors note), the online/offline effect concerns ease of access: Institutional users have either offline access or online access, and, according to M & S's results, in economics, the increased ease of accessing articles online does not increase citations.

This could be true (although the growth across those same years of the tendency in economics to make prepublication preprints OA [harvested by RePEc] through author self-archiving, much as the physicists had started doing a decade earlier in arXiv, and computer scientists started doing even earlier [later harvested by CiteSeerX], could be producing a huge background effect not taken into account at all in M & S's painstaking temporal analysis, which itself appears as an OA preprint in SSRN!).

But any way one looks at it, there is an enormous difference between comparing easy vs. hard access (online vs. offline) and comparing access with no access. For when we compare OA vs non-OA, we are taking into account all those potential users that are at institutions that cannot afford subscriptions (whether offline or online) to the journal in which an article appears. The barrier, in other words (though one should hardly have to point this out to economists), is not an ease barrier but a price barrier: For users at nonsubscribing institutions, non-OA articles are not just harder to access: They are impossible to access -- unless a price is paid.

(I certainly hope that M & S will not reply with "let them use interlibrary loan (ILL)"! A study analogous to M & S's online/offline study comparing citations for offline vs. online vs. ILL access in the click-through age would not only strain belief if it too found no difference, but it too would fail to address OA, since OA is about access when one has reached the limits of one's institution's subscription/license/pay-per-view budget. Hence it would again miss all the usage and citations that an article would have gained if it had been accessible to all its potential users and not just to those whose institutions could afford access, by whatever means.)

It is ironic that M & S draw their conclusions about OA in economic terms (predictably, as their interest is in modelling publication economics), that is, in terms of the costs and benefits, for an author, of paying to publish in an OA journal, concluding that since they have shown it will not generate more citations, it is not worth the money.

But the most compelling findings on the OA citation advantage come from OA author self-archiving (of articles published in non-OA journals), not from OA journal publishing. Those are the studies that show the OA citation advantage, and the advantage does not cost the author a penny! (The benefits, moreover, accrue not only to authors and users, but to their institutions too, as the economic analysis of Houghton et al shows.)

And the extra citations resulting from OA are almost certainly coming from users for whom access to the article would otherwise have been financially prohibitive. (Perhaps it's time for more econometric modeling from the user's point of view too…)

I recommend that M & S look at the studies of Michael Kurtz in astrophysics. Those, too, included sophisticated long-term studies of the effect of the wholesale switch from offline to online, and Kurtz found that total citations were in fact slightly reduced, overall, when journals became accessible online! But astrophysics, too, is a field in which OA self-archiving is widespread. Hence whether and when journals go online is moot, insofar as citations are concerned.

(The likely hypothesis for the reduced citations -- compatible also with our own findings in Gargouri et al -- is that OA levels the playing field for users: OA articles are accessible to all their potential users, not just to those whose institutions can afford toll access. As a result, users can self-selectively decide to cite only the best and most relevant articles of all, rather than having to make do with a selection among only the articles to which their institutions can afford toll access. One corollary of this [though probably also a spinoff of the Seglen/Pareto effect] is that the biggest beneficiaries of the OA citation advantage will be the best articles. This is a user-end -- rather than an author-end -- selection effect...)

MCCABE: 5. At least in the case of economics and business titles, it is not even possible to properly test for an independent OA effect by specifically looking at OA journals in these fields since there are almost no titles that switched from print/online to OA (I can think of only one such title in our sample that actually permitted backfiles to be placed in an OA repository). Indeed, almost all of the OA titles in econ/business have always been OA and so no statistically meaningful before and after comparisons can be performed.

The multiple conflation here is so flagrant that it is almost laughable. Online ≠ OA and OA ≠ OA journal.

First, the method of comparing the effect on citations before vs. after the offline/online switch will have to make do with its limitations. (We don't think it's of much use for studying OA effects at all.) The method of comparing the effect on citations of OA vs. non-OA within the same (economics/business, toll-access) journals can certainly proceed apace in those disciplines, the studies have been done, and the results are much the same as in other disciplines.

M & S have our latest dataset: Perhaps they would care to test whether the economics/business subset of it is an exception to our finding that (a) there is a significant OA advantage in all disciplines, and (b) it's just as big when the OA is mandated as when it is self-selected.

MCCABE: 6. One alternative, in the case of cross-section type data, is to construct field experiments in which articles are randomly assigned OA status (e.g. Davis (2008) employs this approach and reports no OA benefit).

And another one -- based on an incomparably larger N, across far more fields -- is the Gargouri et al study that M & S fail to mention in their article, in which articles are mandatorily assigned OA status, and for which they have the full dataset in hand, as requested.

MCCABE: 7. Another option is to examine articles before and after they were placed in OA repositories, so that the likely selection bias effects, important secular trends, etc. can be accounted for (or in economics jargon, “differenced out”). Evans and Reimer’s attempt to do this in their 2009 paper but only meet part of the econometric challenge.

M & S are rather too wedded to their before/after method and thinking! The sensible time for authors to self-archive their papers is immediately upon acceptance for publication. That's before the published version has even appeared. Otherwise one is not studying OA but OA embargo effects. (But let me agree on one point: Unlike journal publication dates, OA self-archiving dates are not always known or taken into account; so there may be some drift there, depending on when the author self-archives. The solution is not to study the before/after watershed, but to focus on the articles that are self-archived immediately rather than later.)

Stevan Harnad

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5 (10). e13636

Harnad, S. (2010) The Immediate Practical Implication of the Houghton Report: Provide Green Open Access Now. Prometheus 28 (1): 55-59.

Wednesday, October 20. 2010

Correlation, Causation, and the Weight of Evidence

SUMMARY: One can only speculate on the reasons why some might still wish to cling to the self-selection bias hypothesis in the face of all the evidence to date. It seems almost a matter of common sense that making articles more accessible to users also makes them more usable and citable -- especially in a world where most researchers are familiar with the frustration of arriving at a link to an article that they would like to read (but their institution does not subscribe), so they are asked to drop it into the shopping cart and pay $30 at the check-out counter. The straightforward causal relationship is the default hypothesis, based on both plausibility and the cumulative weight of the evidence. Hence the burden of providing counter-evidence to refute it is now on the advocates of the alternative.

Jennifer Howard ("Is there an Open-Access Advantage?," Chronicle of Higher Education, October 19 2010) seems to have missed the point of our article. It is undisputed that study after study has found that Open Access (OA) is correlated with higher probability of citation. The question our study addressed was whether making an article OA causes the higher probability of citation, or the higher probability causes the article to be made OA.

The latter is the "author self-selection bias" hypothesis, according to which the only reason OA articles are cited more is that authors do not make all articles OA: only the better ones, the ones that are also more likely to be cited.

But almost no one finds that OA articles are cited more a year after publication. The OA citation advantage only becomes statistically detectable after citations have accumulated for 2-3 years.

Even more important, Davis et al. did not test the obvious and essential control condition in their randomized OA experiment: They did not test whether there was a statistically detectable OA advantage for self-selected OA in the same journals and time-window. You cannot show that an effect is an artifact of self-selection unless you show that with self-selection the effect is there, whereas with randomization it is not. All Davis et al showed was that there is no detectable OA advantage at all in their one-year sample (247 articles from 11 Biology journals); randomness and self-selection have nothing to do with it.

Davis et al released their results prematurely. We are waiting*,** to hear what Davis finds after 2-3 years, when he completes his doctoral dissertation. But if all he reports is that he has found no OA advantage at all in that sample of 11 biology journals, and that interval, rather than an OA advantage for the self-selected subset and no OA advantage for the randomized subset, then again, all we will have is a failure to replicate the positive effect that has now been reported by many other investigators, in field after field, often with far larger samples than Davis et al's.

Meanwhile, our study was similar to that of Davis et al's, except that it was a much bigger sample, across many fields, and a much larger time window -- and, most important, we did have a self-selective matched-control subset, which did show the usual OA advantage. Instead of comparing self-selective OA with randomized OA, however, we compared it with mandated OA -- which amounts to much the same thing, because the point of the self-selection hypothesis is that the author picks and chooses what to make OA, whereas if the OA is mandatory (required), the author is not picking and choosing, just as the author is not picking and choosing when the OA is imposed randomly.

Davis's results are welcome and interesting, and include some good theoretical insights, but insofar as the OA Citation Advantage is concerned, the empirical findings turn out to be just a failure to replicate the OA Citation Advantage in that particular sample and time-span -- exactly as predicted above. The original 2008 sample of 247 OA and 1372 non-OA articles in 11 journals one year after publication has now been extended to 712 OA and 2533 non-OA articles in 36 journals two years after publication. The result is a significant download advantage for OA articles but no significant citation advantage.

*Note added October 31, 2010: Davis's dissertation turns out to have been posted on the same day as the present posting (October 20; thanks to Les Carr for drawing this to my attention on October 24!).

**Note added November 24, 2010: Phil Davis's results -- a replication of the OA download advantage and a non-replication of the OA citation advantage -- have since been published as: Davis, P. (2010) Does Open Access Lead to Increased Readership and Citations? A Randomized Controlled Trial of Articles Published in APS Journals. The Physiologist 53(6) December 2010.

The only way to describe this outcome is as a non-replication of the OA Citation Advantage on this particular sample; it is most definitely not a demonstration that the OA Advantage is an artifact of self-selection, since there is no control group demonstrating the presence of the citation advantage with self-selected OA and the absence of the citation advantage with randomized OA across the same sample and time-span: There is simply the failure to detect any citation advantage at all.

This failure to replicate is almost certainly due to the small sample size as well as the short time-span. (Davis's a-priori estimates of the sample size required to detect a 20% difference took no account of the fact that citations grow with time; and the a-priori criterion fails even to be met for the self-selected subsample of 65.)

"I could not detect the effect in a much smaller and briefer sample than others" is hardly news! Compare the sample size of Davis's negative results with the sample-sizes and time-spans of some of the studies that found positive results:

And our finding is that the mandated OA advantage is just as big as the self-selective OA advantage.

As we discussed in our article, if someone really clings to the self-selection hypothesis, there are some remaining points of uncertainty in our study that self-selectionists can still hope will eventually bear them out: Compliance with the mandates was not 100%, but 60-70%. So the self-selection hypothesis has a chance of being resurrected if one argues that now it is no longer a case of positive selection for the stronger articles, but a refusal to comply with the mandate for the weaker ones. One would have expected, however, that if this were true, the OA advantage would at least be weaker for mandated OA than for unmandated OA, since the percentage of total output that is self-archived under a mandate is almost three times the 5-25% that is self-archived self-selectively. Yet the OA advantage is undiminished with 60-70% mandate compliance in 2002-2006. We have since extended the window by three more years, to 2009; the compliance rate rises by another 10%, but the mandated OA advantage remains undiminished. Self-selectionists don't have to cede till the percentage is 100%, but their hypothesis gets more and more far-fetched...

The other way of saving the self-selection hypothesis despite our findings is to argue that there was a "self-selection" bias in terms of which institutions do and do not mandate OA: Maybe it's the better ones that self-select to do so. There may be a plausible case to be made that one of our four mandated institutions -- CERN -- is an elite institution. (It is also physics-only.) But, as we reported, we re-did our analysis removing CERN, and we got the same outcome. Even if the objection of eliteness is extended to Southampton ECS, removing that second institution did not change the outcome either. We leave it to the reader to decide whether it is plausible to count our remaining two mandating institutions -- University of Minho in Portugal and Queensland University of Technology in Australia -- as elite institutions, compared to other universities. It is a historical fact, however, that these four institutions were the first in the world to elect to mandate OA.

One can only speculate on the reasons why some might still wish to cling to the self-selection bias hypothesis in the face of all the evidence to date. It seems almost a matter of common sense that making articles more accessible to users also makes them more usable and citable -- especially in a world where most researchers are familiar with the frustration of arriving at a link to an article that they would like to read (but their institution does not subscribe), so they are asked to drop it into the shopping cart and pay $30 at the check-out counter. The straightforward causal relationship is the default hypothesis, based on both plausibility and the cumulative weight of the evidence. Hence the burden of providing counter-evidence to refute it is now on the advocates of the alternative.

Davis, PN, Lewenstein, BV, Simon, DH, Booth, JG, & Connolly, MJL (2008) Open access publishing, article downloads, and citations: randomised controlled trial, British Medical Journal 337: a568

Gargouri, Y., Hajjem, C., Lariviere, V., Gingras, Y., Brody, T., Carr, L. and Harnad, S. (2010) Self-Selected or Mandated, Open Access Increases Citation Impact for Higher Quality Research. PLOS ONE 5 (10). e13636

Harnad, S. (2008) Davis et al's 1-year Study of Self-Selection Bias: No Self-Archiving Control, No OA Effect, No Conclusion. Open Access Archivangelism July 31 2008

Wednesday, November 19. 2008

Open Access Allows All the Cream to Rise to the Top

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

Tenopir & King's confirmation of the finding (by Kurtz and others) -- that as more articles become accessible, more articles are indeed accessed (and read), but fewer articles are cited (and those are cited more) -- is best explained by the increased selectivity made possible by that increased accessibility:

The Seglen "skewness" effect is that the top 20% of articles receive 80% of all citations. It is probably safe to say that although there are no doubt some bandwagon and copycat effects contributing to the Seglen effect, overall the 20/80 rule probably reflects the fact that the best work gets cited most (skewing citations toward the top of the quality distribution).

So when more researchers have access to more (or, conversely, are denied access to less), they are more likely to access the best work, and the best work thereby increases its likelihood of being cited, whereas the rest correspondingly decreases its likelihood of being cited. Another way to put it is that there is a levelling of the playing field: Any advantage that the lower 80% had enjoyed from mere accessibility in the toll-access lottery is eliminated, and with it any handicap the top 20% suffered from inaccessibility in the toll-access lottery is eliminated too. Open Access (OA) allows all the cream to rise to the top; accessibility is no longer a constraint on what to cite, one way or the other.

(I would like to point out also that this "quality selectivity" on the part of users -- rather than self-selection on the part of authors -- is likely to be the main contributor to the citation advantage of Open Access articles over Toll Access articles. It follows from the 20/80 rule that whatever quality-selectivity there is on the part of users will be enjoyed mostly by the top 20% of articles. There is no doubt at all that the top authors are more likely to make their articles OA, and that the top articles are more likely to be made OA, but one should ask oneself why that should be the case, if there were no benefits [or the only benefit were more readers, but fewer citations!]: One of the reasons the top articles are more likely to be made OA is precisely that they are also the most likely to be used, applied and cited more if they are made OA!)

Stevan Harnad

American Scientist Open Access Forum

Monday, August 25. 2008

Confirmation Bias and the Open Access Advantage: Some Methodological Suggestions for Davis's Citation Study

Update Jan 1, 2010: See Gargouri, Y; C Hajjem, V Larivière, Y Gingras, L Carr, T Brody & S Harnad (2010) “Open Access, Whether Self-Selected or Mandated, Increases Citation Impact, Especially for Higher Quality Research”

Update Feb 8, 2010: See also "Open Access: Self-Selected, Mandated & Random; Answers & Questions"

SUMMARY: Davis (2008) analyzes citations from 2004-2007 in 11 biomedical journals. For 1,600 of the 11,000 articles (15%), their authors paid the publisher to make them Open Access (OA). The outcome, confirming previous studies (on both paid and unpaid OA), is a significant OA citation Advantage, but a small one (21%, 4% of it correlated with other article variables such as number of authors, references and pages). The author infers that the size of the OA advantage in this biomedical sample has been shrinking annually from 2004-2007, but the data suggest the opposite. In order to draw valid conclusions from these data, the following five further analyses are necessary:

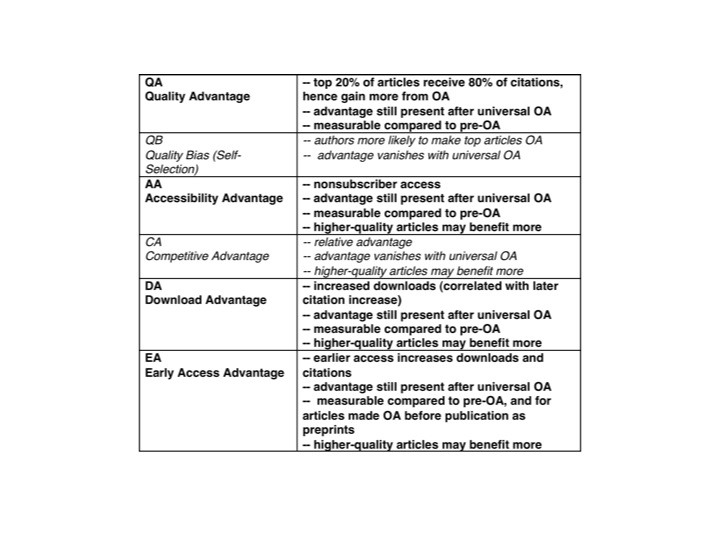

(1) The current analysis is based only on author-choice (paid) OA. Free OA self-archiving needs to be taken into account too, for the same journals and years, rather than being counted as non-OA, as in the current analysis.

(2) The proportion of OA articles per journal per year needs to be reported and taken into account.

(3) Estimates of journal and article quality and citability in the form of the Journal Impact Factor and the relation between the size of the OA Advantage and journal as well as article “citation-bracket” need to be taken into account.

(4) The sample-size for the highest-impact, largest-sample journal analyzed, PNAS, is restricted and is excluded from some of the analyses. An analysis of the full PNAS dataset is needed, for the entire 2004-2007 period.

(5) The analysis of the interaction between OA and time, 2004-2007, is based on retrospective data from a June 2008 total cumulative citation count. The analysis needs to be redone taking into account the dates of both the cited articles and the citing articles, otherwise article-age effects and any other real-time effects from 2004-2008 are confounded.

Davis proposes that an author self-selection bias for providing OA to higher-quality articles (the Quality Bias, QB) is the primary cause of the observed OA Advantage, but this study does not test or show anything at all about the causal role of QB (or of any of the other potential causal factors, such as Accessibility Advantage, AA, Competitive Advantage, CA, Download Advantage, DA, Early Advantage, EA, and Quality Advantage, QA). The author also suggests that paid OA is not worth the cost, per extra citation. This is probably true, but with OA self-archiving, both the OA and the extra citations are free.

Comments on: Davis, P.M. (2008) Author-choice open access publishing in the biological and medical literature: a citation analysis. Journal of the American Society for Information Science and Technology (JASIST) (in press) http://arxiv.org/pdf/0808.2428v1

The Davis (2008) preprint is an analysis of the citations from years c. 2004-2007 in 11 biomedical journals: c. 11,000 articles, of which c. 1,600 (15%) were made Open Access (OA) through “Author Choice” (AC-OA: the author chooses to pay the publisher for OA). Author self-archiving (SA-OA) of articles from the same journals was not measured.

The result was a significant OA citation advantage (21%) over time, of which 4% was correlated with variables other than OA and time (number of authors, pages, references; whether article is a Review and has a US co-author).

This outcome confirms the findings of numerous previous studies (some of them based on far larger samples of fields, journals, articles and time-intervals) of an OA citation advantage (ranging from 25%-250%) in all fields, across a 10-year range (Hitchcock 2008).

The preprint also states that the size of the OA advantage in this biomedical sample diminishes annually from 2004-2007. But the data seem to show the opposite: that as an article gets older, and its cumulative citations grow, its absolute and relative OA advantage grow too.

The preprint concludes, based on its estimate of the size of the OA citation Advantage, that AC-OA is not worth the cost, per extra citation. This is probably true -- but with SA-OA the OA and the extra citations can be had at no cost at all.

The paper is accepted for publication in JASIST. It is not clear whether the linked text is the unrefereed preprint, or the refereed, revised postprint. On the assumption that it is the unrefereed preprint, what follows is an extended peer commentary with recommendations on what should be done in revising it for publication.

(It is very possible, however, that some or all of these revisions were also recommended by the JASIST referees and that some of the changes have already been made in the published version.)

As it stands currently, this study (i) confirms a significant OA citation Advantage, (ii) shows that it grows cumulatively with article age and (iii) shows that it is correlated with several other variables that are correlated with citation counts.

Although the author argues that an author self-selection bias for preferentially providing OA to higher-quality articles (the Quality Bias, QB) is the primary causal factor underlying the observed OA Advantage, in fact this study does not test or show anything at all about the causal role of QB (or of any of the other potential causal factors underlying the OA Advantage, such as Accessibility Advantage, AA, Competitive Advantage, CA, Download Advantage, DA, Early Advantage, EA, and Quality Advantage, QA; Hajjem & Harnad 2007b).

The following 5 further analyses of the data are necessary. The size and pattern of the observed results, as well as their interpretations, could all be significantly altered (as well as deepened) by their outcome:

(1) The current analysis is based only on author-choice (paid) OA. Free author self-archiving OA needs to be taken into account too, for the same journals and years, rather than being counted as non-OA, as in the current analysis.

(2) The proportion of OA articles per journal per year needs to be reported and taken into account.

(3) Estimates of journal and article quality and citability in the form of the Journal Impact Factor (journal’s average citations) and the relation between the size of the OA Advantage and journal and article “citation-bracket” need to be taken into account.

(4) The sample-size for the highest-impact, largest-sample journal, PNAS, is restricted and is excluded from some of the analyses. A full analysis of the full PNAS dataset is needed, for the entire 2004-2007 period.

(5) The analysis of the interaction between OA and time, 2004-2007, is based on retrospective data from a June 2008 total cumulative citation count. The analysis needs to be redone taking into account the dates of both the cited articles and the citing articles, otherwise article-age effects and any other real-time effects from 2004-2008 are confounded.

Commentary on the text of the preprint:

“ABSTRACT… there is strong evidence to suggest that the open access advantage is declining by about 7% per year, from 32% in 2004 to 11% in 2007”

It is not clearly explained how these figures and their interpretation are derived, nor is it reported how many OA articles there were in each of these years. The figures appear to be based on a statistical interaction between OA and article-age in a multiple regression analysis for 9 of the 11 journals in the sample. (a) The data from PNAS, the largest and highest-impact journal, are excluded from this analysis. (b) The many variables included in the (full) multiple regression equation (across journals) omit one of the most obvious ones: journal impact factor. (c) OA articles that are self-archived rather than paid author-choice are not identified and included as OA, hence their citations are counted as being non-OA. (d) The OA/age interaction is not based on yearly citations after a fixed interval for each year, but on cumulative retrospective citations in June 2008.

The natural interpretation of Figure 1 accordingly seems to be the exact opposite of the one the author makes: Not that the size of the OA Advantage shrinks from 2004-2007, but that the size of the OA Advantage grows from 2007-2004 (as articles get older and their citations grow). Not only do cumulative citations grow for both OA and non-OA articles from year 2007 articles to year 2004 articles, but the cumulative OA advantage increases (by about 7% per year, even on the basis of this study’s rather slim and selective data and analyses).
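The arithmetic behind the two readings can be checked directly. The sketch below uses only the two figures quoted from the abstract (32% for 2004 articles, 11% for 2007 articles) and the June 2008 citation-count date. The function and variable names are illustrative, and treating article age as the difference between count year and publication year is a simplifying assumption, not a figure from the preprint.

```python
# Figures quoted from the Davis (2008) abstract: OA advantage (%) by publication year.
oa_advantage_pct = {2004: 32, 2007: 11}
COUNT_YEAR = 2008  # cumulative citations were counted in June 2008


def change_per_year(first_year, last_year, table):
    """Average change in OA advantage (percentage points) per publication year."""
    return (table[last_year] - table[first_year]) / (last_year - first_year)


# Reading 1 (the preprint's): index the advantage by publication year.
by_pub_year = change_per_year(2004, 2007, oa_advantage_pct)

# Reading 2: index the very same numbers by approximate article age at count time.
by_age = {COUNT_YEAR - year: pct for year, pct in oa_advantage_pct.items()}
youngest, oldest = min(by_age), max(by_age)
growth_per_year_of_age = (by_age[oldest] - by_age[youngest]) / (oldest - youngest)

print(by_pub_year)             # -7.0 points per publication year
print(growth_per_year_of_age)  # 7.0 points per year of article age
```

The same two data points yield a 7-point annual "decline" or a 7-point annual growth depending only on whether the axis is publication year or article age, which is why a retrospective cumulative count cannot separate the two.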

This is quite natural, as not only do citations grow with time, but the OA Advantage -- barely detectable in the first year, being then based on the smallest sample and the fewest citations -- emerges with time.

“See Craig et al. [2007] for a critical review of the literature [on the OA citation advantage]”

Craig et al’s rather slanted 2007 review is the only reference to previous findings on the OA Advantage cited by the Davis preprint (Harnad 2007a). Craig et al. had attempted to reinterpret the many times replicated positive finding of an OA citation advantage, on the basis of 4 negative findings (Davis & Fromerth, 2007; Kurtz et al., 2005; Kurtz & Henneken, 2007; Moed, 2007), in maths, astronomy and condensed matter physics, respectively. Apart from Davis’s own prior study, these studies were based mainly on preprints that were made OA well before publication. The observed OA advantage consisted mostly of an Early Access Advantage for the OA prepublication preprint, plus an inferred Quality Bias (QB) on the part of authors towards preferentially providing OA to higher quality preprints (Harnad 2007b).

The Davis preprint does not cite any of the considerably larger number of studies that have reported large and consistent OA advantages for postprints, based on many more fields, some of them based on far larger samples and longer time intervals (Hitchcock 2008). Instead, Davis focuses rather single-mindedly on the hypothesis that most or all of the OA Advantage is the result of the self-selection bias (QB) toward preferentially making higher-quality (hence more citable) articles OA:

“authors selectively choose which articles to promote freely… [and] highly cited authors disproportionately choose open access venues”

It is undoubtedly true that better authors are more likely to make their articles OA, and that authors in general are more likely to make their better articles OA. This Quality or “Self-Selection” Bias (QB) is one of the probable causes of the OA Advantage.

However, no study has shown that QB is the only cause of the OA Advantage, nor even that it is the biggest cause. Three of the studies cited (Kurtz et al., 2005; Kurtz & Henneken, 2007; Moed, 2007) showed that another causal factor is Early Access (EA: providing OA earlier results in more citations).

There are several other candidate causal factors in the OA Advantage, besides QB and EA (Hajjem & Harnad 2007b):

There is the Download (or Usage) Advantage (DA): OA articles are downloaded significantly more, and this early DA has also been shown to be predictive of a later citation advantage in Physics (Brody et al. 2006).

There is a Competitive Advantage (CA): OA articles are in competition with non-OA articles, and to the extent that OA articles are relatively more accessible than non-OA articles, they can be used and cited more. Both QB and CA, however, are temporary components of the OA advantage that will necessarily shrink to zero and disappear once all research is OA. EA and DA, in contrast, will continue to contribute to the OA advantage even after universal OA is reached, when all postprints are being made OA immediately upon publication, compared to pre-OA days (as Kurtz has shown for Astronomy, which has already reached universal post-publication OA).

There is an Accessibility Advantage (AA) for those users whose institutions do not have subscription access to the journal in which the article appeared. AA too (unlike CA) persists even after universal OA is reached: all articles then have AA's full benefit.

And there is at least one more important causal component in the OA Advantage, apart from AA, CA, DA and QB, and that is a Quality Advantage (QA), which has often been erroneously conflated with QB (Quality Bias):

Ever since Lawrence’s original study in 2001, the OA Advantage can be estimated in two different ways: (1) by comparing the average citations for OA and non-OA articles (log citation ratios within the same journal and year, or regression analyses like Davis’s) and (2) by comparing the proportion of OA articles in different “citation brackets” (0, 1, 2, 3-4, 5-8, 9-16, 17+ citations).

In method (2), the OA Advantage is observed in the form of an increase in the proportion of OA articles in the higher citation brackets. But this correlation can be explained in two ways. One is QB, which is that authors are more likely to make higher-quality articles OA. But it is also at least as plausible that higher-quality articles benefit more from OA! It is already known that the top c. 10-20% of articles receive c. 80-90% of all citations (Seglen’s 1992 “skewness of science”). It stands to reason, then, that when all articles are made OA, it is the top 20% of articles that are most likely to be cited more: Not all OA articles benefit from OA equally, because not all articles are of equally citable quality.
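The two estimation methods can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the procedure of any cited study: the records are invented toy data, the function names are hypothetical, and method (1) is rendered here as the log of the ratio of mean OA to mean non-OA citations within each journal and year. Only the bracket boundaries come from the text above.

```python
import math
from collections import defaultdict

# Hypothetical records, invented for illustration: (journal, year, is_oa, citations).
articles = [
    ("J1", 2004, True, 12), ("J1", 2004, True, 6),
    ("J1", 2004, False, 4), ("J1", 2004, False, 2), ("J1", 2004, False, 0),
    ("J2", 2004, True, 20), ("J2", 2004, False, 9), ("J2", 2004, False, 1),
]

# Citation brackets as listed in the text: 0, 1, 2, 3-4, 5-8, 9-16, 17+.
BRACKETS = [(0, 0), (1, 1), (2, 2), (3, 4), (5, 8), (9, 16), (17, None)]


def mean(values):
    return sum(values) / len(values)


def log_citation_ratios(records):
    """Method (1): log(mean OA / mean non-OA citations) per journal and year."""
    groups = defaultdict(lambda: {True: [], False: []})
    for journal, year, is_oa, cites in records:
        groups[(journal, year)][is_oa].append(cites)
    ratios = {}
    for key, group in groups.items():
        # Skip cells where either arm is empty or has a zero mean (log undefined).
        if group[True] and group[False] and mean(group[True]) > 0 and mean(group[False]) > 0:
            ratios[key] = math.log(mean(group[True]) / mean(group[False]))
    return ratios


def bracket_label(cites):
    """Return the label of the citation bracket that contains `cites`."""
    for low, high in BRACKETS:
        if high is None:
            if cites >= low:
                return f"{low}+"
        elif low <= cites <= high:
            return str(low) if low == high else f"{low}-{high}"
    raise ValueError("citation counts must be non-negative integers")


def oa_share_by_bracket(records):
    """Method (2): proportion of OA articles within each citation bracket."""
    counts = defaultdict(lambda: [0, 0])  # label -> [oa_count, total_count]
    for _, _, is_oa, cites in records:
        label = bracket_label(cites)
        counts[label][0] += int(is_oa)
        counts[label][1] += 1
    return {label: oa / total for label, (oa, total) in counts.items()}


ratios = log_citation_ratios(articles)
shares = oa_share_by_bracket(articles)
print(ratios)
print(shares)
```

In the toy data the OA share rises toward the higher brackets, which is the pattern method (2) looks for. The pattern alone cannot say whether QB or QA produced it.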

Hence both QB and QA are likely to be causal components in the OA Advantage, and the only way to tease them apart and estimate their individual contributions is to control for the QB effect by imposing the OA instead of allowing it to be determined by self-selection. We (Gargouri, Hajjem, Gingras, Carr & Harnad, in prep.) are completing such a study now, comparing mandated and unmandated OA; and Davis et al 2008 have just published another study on randomized OA for 11 journals:

“In the first controlled trial of open access publishing where articles were randomly assigned to either open access or subscription-access status, we recently reported that no citation advantage could be attributed to access status (Davis, Lewenstein, Simon, Booth, & Connolly, 2008)”

This randomized OA study by Davis et al. was very welcome and timely, but it had originally been announced to cover a 4-year period, from 2007-2010, whereas it was instead prematurely published in 2008, after only one year. No OA advantage at all was observed in that 1-year interval, and this too agrees with the many existing studies on the OA Advantage, some based on far larger samples of journals, articles and fields: Most of those studies (none of them randomized) likewise detected no OA citation advantage at all in the first year: It is simply too early. In most fields, citations take longer than a year to be made, published, ISI-indexed and measured, and to make any further differentials (such as the OA Advantage) measurable. (This is evident in Davis’s present preprint too, where the OA advantage is barely visible in the first year (2007).)

The only way the absence of a significant OA advantage in a sample with randomized OA can be used to demonstrate that the OA Advantage is only or mostly just a self-selection bias (QB) is by also demonstrating the presence of a significant OA advantage in the same (or comparable) sample with nonrandomized (i.e., self-selected) OA.

But Davis et al. did not do this control comparison (Harnad 2008b). Finding no OA Advantage with randomized OA after one year merely confirms the (widely observed) finding that one year is usually too early to detect any OA Advantage; but it shows nothing whatsoever about self-selection QB.

“we examine the citation performance of author-choice open access”

It is quite useful and interesting to examine citations for OA and non-OA articles where the OA is provided through (self-selected) “Author-Choice” (i.e., authors paying the publisher to make the article OA on the publisher’s website).

Most prior studies of the OA citation Advantage, however, are based on free self-archiving by authors on their personal, institutional or central websites. In the bigger studies, a robot trawls the web using ISI bibliographic metadata to find which articles are freely available on the web (Hajjem et al. 2005).

Hence a natural (indeed essential) control test that has been omitted from Davis’s current author-choice study – a test very much like the control test omitted from the Davis et al. randomized OA study – is to identify the articles in the same sample that were made OA through author self-archiving. If those articles are identified and counted, that not only provides an estimate of the relative uptake of author-choice OA vs OA self-archiving in the same sample interval, but it allows a comparison of their respective OA Advantages. More important, it corrects the estimate of an OA Advantage based on author-choice OA alone: For, as Davis has currently done the analysis, any OA Advantage from OA self-archiving in this sample would in fact reduce the estimate of the OA Advantage based on author-choice OA (by mistakenly counting as non-OA the articles and citation counts for self-archived OA articles).
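The dilution argument can be made concrete with a little arithmetic. The sketch below is purely illustrative: the function name, the baseline citation rate and the proportions are hypothetical and are not taken from Davis’s data. It also assumes, for simplicity, that self-archived (SA-OA) articles enjoy the same proportional citation gain as author-choice OA articles.

```python
def observed_advantage(true_advantage, sa_fraction, baseline=10.0):
    """Apparent author-choice OA advantage when SA-OA articles are counted as non-OA.

    true_advantage: proportional citation gain from OA (0.30 means +30%).
    sa_fraction: share of the "non-OA" comparison group that is in fact self-archived.
    baseline: mean citations of a genuinely non-OA article (hypothetical value).
    """
    oa_mean = baseline * (1 + true_advantage)
    # The comparison group is a mixture of truly non-OA and self-archived OA articles.
    comparison_mean = (1 - sa_fraction) * baseline + sa_fraction * oa_mean
    return oa_mean / comparison_mean - 1


for share in (0.0, 0.15, 0.30):
    apparent = observed_advantage(0.30, share)
    print(f"SA-OA share {share:.0%}: apparent advantage {apparent:.1%}")
```

Under these assumed numbers, a true 30% advantage shrinks to roughly 24% when 15% of the comparison group is self-archived, and to roughly 19% when 30% is. The more self-archiving there is in the sample, the more the author-choice estimate is understated.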

“METHODS… The uptake of the open access author-choice programs for these [11] journals ranged from 5% to 22% over the dates analyzed”

Davis’s preprint does not seem to provide the data – either for individual journals or for the combined totals – on the percentage of author-choice OA (henceforth AC-OA) by year, nor on the relation between the proportion uptake of AC-OA and the size of the OA Advantage, by year.

As Davis has been careful to do multiple regression analyses on many of the article-variables that might correlate with citations and OA (article age, number of authors, number of references, etc.), it seems odd not to take into account the relation between the size of the AC-OA Advantage and the degree of uptake of AC-OA, by year. The other missing information is the corresponding data for self-archiving OA (henceforth SA-OA).

“[For] All of the journals… all articles roll into free access after an initial period [restricted to subscription access only for 12 months (8 journals), 6 months (2 journals) or 24 months (1 journal)]”

(This is important in relation to the Early Access (EA) Advantage, which is the biggest contributor to the OA Advantage in the two cited studies by Kurtz on Astronomy. Astronomy has free access to the postprints of all articles in all astronomy journals immediately upon publication. Hence Astronomy has scope for an OA Advantage only through an EA Advantage, arising from the early posting of preprints before publication. The size of the OA Advantage in other fields -- in which (unlike in Astronomy) access to the postprint is restricted to subscribers-only for 6, 12, or 24 months -- would then be the equivalent of an estimate of an “EA Advantage” for those potential users who lack subscription access – i.e., the Accessibility Advantage, AA.)

“Cumulative article citations were retrieved on June 1, 2008. The age of the articles ranged from 18 to 57 months”

Most of the 11 journals were sampled till December 2007. That would mean that the 2007 OA Advantage was based on even less than one year from publication.

“STATISTICAL ANALYSIS… Because citation distributions are known to be heavily skewed (Seglen, 1992) and because some of the articles were not yet cited in our dataset, we followed the common practice of adding one citation to every article and then taking the natural log”

(How well did that correct the skewness? If it still was not normal, then citations might have to be dichotomized as a 0/1 variable, comparing, by citation-bracket slices, (1) 0 citations vs 1 or more citations, (2) 0 or 1 vs more than 1, (3) 2 or fewer vs. more than 2, (4) 3 or fewer vs. more than 3… etc.)
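As a minimal sketch of the two options just mentioned, the snippet below applies the add-one-then-log transformation and the bracket-by-bracket 0/1 dichotomization to a small set of citation counts. The counts are hypothetical, the function names are illustrative only, and the skewness measure used (the third standardized moment) is just one simple way of checking how far the log transform has reduced the skew.

```python
import math


def log_citations(counts):
    """Add one citation to every article, then take the natural log."""
    return [math.log(c + 1) for c in counts]


def dichotomize(counts, threshold):
    """Return 1 for articles with more than `threshold` citations, else 0."""
    return [1 if c > threshold else 0 for c in counts]


def skewness(values):
    """Population skewness (third standardized moment); 0 for a symmetric sample."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((v - mean) ** 2 for v in values) / n
    m3 = sum((v - mean) ** 3 for v in values) / n
    return m3 / m2 ** 1.5


citations = [0, 0, 0, 1, 1, 2, 3, 5, 8, 40]  # hypothetical, heavily skewed counts

print("skewness, raw counts:", skewness(citations))
print("skewness, log(1 + c):", skewness(log_citations(citations)))

# Bracket slices: 0 vs 1 or more, 0-1 vs more than 1, 0-2 vs more than 2, ...
for k in range(4):
    print(f"more than {k} citations:", dichotomize(citations, k))
```

Each dichotomized variable could then serve as the outcome in a separate comparison of OA and non-OA articles, one per citation bracket.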

“For each journal, we ran a reduced [2 predictor] model [article age and OA] and a full [7 predictor] regression model [age, OA; log no. of authors, references, pages; Review; US author]”

Both analyses are, of course, a good idea to do, but why was Journal Impact Factor (JIF) not tested as one of the predictor variables in the cross-journal analyses (Hajjem & Harnad 2007a)? Surely JIF, too, correlates with citations: Indeed, the Davis study assumes as much, as it later uses JIF as the multiplier factor in calculating the cost per extra citation for author-choice OA (see below).

Analyses by journal JIF citation-bracket, for example, can provide estimates of QA (Quality Advantage) if the OA Advantage is bigger in the higher journal citation-brackets. (Davis’s study is preoccupied with the self-selection QB bias, which it does not and cannot test, but it fails to test other candidate contributors to the OA Advantage that it can test.)

(An important and often overlooked logical point should also be noted about the correlates of citations and the direction of causation: The many predictor variables in the multiple regression equations predict not only the OA citation Advantage; they also predict citation counts themselves. It does not necessarily follow from the fact that, say, longer articles are more likely to be cited that article length is therefore an artifact that must be factored out of citation counts in order to get a more valid estimate of how accurately citations measure quality. One possibility is that length is indeed an artifact. But the other possibility is that length is a valid causal factor in quality! If length is indeed an artifact, then longer articles are being cited more just because they are longer, rather than because they are better, and this length bias needs to be subtracted out of citation counts as measures of quality. But if the extra length is a causal contributor to what makes the better articles better, then subtracting out the length effect simply serves to make citation counts a blunter, not a sharper instrument for measuring quality. The same reasoning applies to some of the other correlates of citation counts, as well as their relation to the OA citation Advantage. Systematically removing them all, even if they are not artifactual, systematically divests citation counts of their potential power to predict quality. This is another reason why citation counts need to be systematically validated against other evaluative measures [Harnad 2008a].)

“Because we may lack the statistical power to detect small significant differences for individual journals, we also analyze our data on an aggregate level”

It is a reasonable, valid strategy to analyze across journals. Yet this study still persists in drawing individual-journal level conclusions, despite having indicated (correctly) that its sample may be too small to have the power to detect individual-journal level differences (see below).

(On the other hand, it is not clear whether all the OA/non-OA citation comparisons were always within-journal, within-year, as they ought to be; no data are presented for the percentage of OA articles per year, per journal. OA/non-OA comparisons must always be within-journal/year comparisons, to be sure to compare like with like.)
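A within-journal, within-year comparison of this kind might be sketched as follows. The record layout, the function name and the sample values are all hypothetical; the point is only that each OA/non-OA ratio is computed inside a single (journal, year) cell, so that like is compared with like.

```python
from collections import defaultdict
from statistics import mean


def oa_ratio_by_cell(articles):
    """Mean OA / mean non-OA citations within each (journal, year) cell.

    `articles` is an iterable of (journal, year, is_oa, citations) tuples.
    Cells lacking either OA or non-OA articles, or whose non-OA mean is
    zero, are skipped because no ratio can be formed for them.
    """
    cells = defaultdict(lambda: {True: [], False: []})
    for journal, year, is_oa, citations in articles:
        cells[(journal, year)][is_oa].append(citations)

    ratios = {}
    for cell, groups in cells.items():
        if groups[True] and groups[False] and mean(groups[False]) > 0:
            ratios[cell] = mean(groups[True]) / mean(groups[False])
    return ratios


sample = [  # hypothetical records
    ("Journal A", 2005, True, 12),
    ("Journal A", 2005, False, 10),
    ("Journal A", 2005, False, 8),
    ("Journal B", 2006, True, 3),
    ("Journal B", 2006, False, 2),
    ("Journal B", 2007, False, 1),  # no OA article in this cell, so it is skipped
]

for cell, ratio in sorted(oa_ratio_by_cell(sample).items()):
    print(cell, round(ratio, 2))
```

Reporting the number of OA and non-OA articles in each cell alongside the ratio would also supply the per-year, per-journal uptake figures that are missing from the preprint.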

“The first model includes all 11 journals, and the second omits the Proceedings of the National Academy of Sciences (PNAS), considering that it contributed nearly one-third (32%) of all articles in our dataset”

Is this a justification for excluding PNAS? Not only was the analysis done with and without PNAS, but, unlike all the other journals, whose data were all included, for the entire time-span, PNAS data were only included from the first and last six months.

Why? PNAS is a very high impact factor journal, with highly cited articles. A study of PNAS alone, with its much bigger sample size, would be instructive in itself – and would almost certainly yield a bigger OA Advantage than the one derived from averaging across all 11 journals (and reducing the PNAS sample size, or excluding PNAS altogether).

There can be a QB difference between PNAS and non-PNAS articles (and authors), to be sure, because PNAS publishes articles of higher quality. But a within-PNAS year-by-year comparison of OA and non-OA that yielded a bigger OA Advantage than a within-journal OA/non-OA comparison for lower-quality journals would also reflect the contribution of QA. (With these data in hand, the author should not be so focused on confirming his hypotheses: take the opportunity to falsify them too!)

“we are able to control for variables that are well-known to predict future citations [but] we cannot control for the quality of an article”

This is correct. One cannot control for the quality of an article; but in comparing within a journal/year, one can compare the size of the OA Advantage for higher and lower impact journals; if the advantage is higher for higher-impact journals, that favors QA over QB.

One can also take target OA and non-OA articles (within each citation bracket), and match the title words of each target article with other articles (in the same journal/year):

If one examines N-citation OA articles and N-citation non-OA articles, are their title-word-matched (non-OA) control articles equally likely to have N or more citations? Or are the word-matched control articles for N-citation OA articles less likely to have N or more citations than the controls for N-citation non-OA articles (which would imply that the OA has raised the OA article’s citation bracket)? And would this effect be greater in the higher citation brackets than in the lower ones (N = 1 to N = >16)?
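One way such a title-word-matched control might be set up is sketched below. Everything here is an assumption made for illustration: the record fields, the use of Jaccard overlap between title-word sets as the matching criterion, the choice of a single best-matching control per target, and the sample articles themselves. It is not a description of any published procedure.

```python
def title_words(title):
    """Lower-cased set of words in a title (no stop-word removal in this sketch)."""
    return set(title.lower().split())


def jaccard(a, b):
    """Overlap of two word sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_control(target, candidates):
    """Non-OA article from the same journal/year whose title best matches the target."""
    pool = [c for c in candidates
            if c is not target and not c["oa"]
            and (c["journal"], c["year"]) == (target["journal"], target["year"])]
    if not pool:
        return None
    words = title_words(target["title"])
    return max(pool, key=lambda c: jaccard(words, title_words(c["title"])))


def share_of_controls_reaching(targets, candidates, n):
    """Fraction of matched controls with n or more citations (None if no controls)."""
    controls = [best_control(t, candidates) for t in targets]
    controls = [c for c in controls if c is not None]
    if not controls:
        return None
    return sum(c["citations"] >= n for c in controls) / len(controls)


articles = [  # hypothetical records, all in one journal/year
    {"title": "Dark matter halos in dwarf galaxies", "journal": "J", "year": 2005,
     "oa": True, "citations": 4},
    {"title": "Dark matter halos in spiral galaxies", "journal": "J", "year": 2005,
     "oa": False, "citations": 2},
    {"title": "Stellar winds in binaries", "journal": "J", "year": 2005,
     "oa": False, "citations": 4},
    {"title": "Stellar winds in massive binaries", "journal": "J", "year": 2005,
     "oa": False, "citations": 5},
]

N = 4
oa_targets = [a for a in articles if a["oa"] and a["citations"] == N]
non_oa_targets = [a for a in articles if not a["oa"] and a["citations"] == N]
print("controls of OA targets reaching N:",
      share_of_controls_reaching(oa_targets, articles, N))
print("controls of non-OA targets reaching N:",
      share_of_controls_reaching(non_oa_targets, articles, N))
```

If the share is systematically lower for the controls of OA targets than for the controls of non-OA targets, that is the pattern that would suggest OA has raised the OA articles' citation bracket. Repeating the comparison for each value of N would show whether the effect grows in the higher brackets.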

If one is resourceful, there are ways to control, or at least triangulate on quality indirectly.

“spending a fee to make one’s article freely available from a publisher’s website may indicate there is something qualitatively different [about that article]”

Yes, but one could probably tell a Just-So story either way about the direction of that difference: paying for OA because one thinks one's article is better, or paying for OA because one thinks one's article is worse! Moreover, this is AC-OA, which costs money; the stakes are different with SA-OA, which only costs a few keystrokes. But this analysis omitted to identify or measure SA-OA.

“RESULTS…The difference in citations between open access and subscription-based articles is small and non-significant for the majority of the journals under investigation”

(1) Compare the above with what is stated earlier: “Because we may lack the statistical power to detect small significant differences for individual journals, we also analyze our data on an aggregate level.”

(2) Davis found an OA Advantage across the entire sample of 11 journals, whereas the individual journal samples were too small to detect one. Why state the non-significance for individual journals as if it were some sort of empirical effect?

“where only time and open access status are the model predictors, five of the eleven journals show positive and significant open access effects.”

(That does not sound too bad, considering that the individual journal samples were small and hence lacked the statistical power to detect small significant differences, and that the PNAS sample was made deliberately small!)

“Analyzing all journals together, we report a small but significant increase in article citations of 21%.”

Whether that OA Advantage is small or big remains to be seen. The bigger published OA Advantages have been reported on the basis of bigger samples.

“Much of this citation increase can be explained by the influence of one journal, PNAS. When this journal is removed from the analysis, the citation difference reduces to 14%.”

This reasoning can appeal only if one has a confirmation bias: PNAS is also the journal with the biggest sample (of which only a fraction was used); and it is also the highest impact journal of the 11 sampled, hence the most likely to show benefits from a Quality Advantage (QA) that generates more citations for higher citation-bracket articles. If the objective had not been to demonstrate that there is little or no OA Advantage (and that what little there is is just due to QB), PNAS would have been analyzed more closely and fully, rather than being minimized and excluded.

“When other explanatory predictors of citations (number of authors, pages, section, etc.) are included in the full model, only two of the eleven journals show positive and significant open access effects. Analyzing all journals together, we estimate a 17% citation advantage, which reduces to 11% if we exclude PNAS.”

In other words, partialling out 5 more correlated variables from this sample reduces the residual OA Advantage by 4 percentage points (from 21% to 17%). And excluding the biggest, highest-quality journal’s data reduces it still further (to 11%).

If there were not this strong confirmation bent on the author’s part, the data would be treated in a rather different way: The fact that a journal with a bigger sample enhances the OA Advantage would be treated as a plus rather than a minus, suggesting that still bigger samples might have the power to detect still bigger OA Advantages. And the fact that PNAS is a higher quality journal would also be the basis for looking more closely at the role of the Quality Advantage (QA). (With less of a confirmation bent, OA Self-archiving, too, would have been controlled for, instead of being credited to non-OA.)